Unit Testing Blender Scripts and Addons with Node.js

Lately I’ve been automating the more tedious bits of my asset creation pipeline, and along the way I’ve been picking up a bit of Blender scripting.

Everything that you can do by hand in Blender can be done via its Python API, so it’s been very fun being able to turn manual tasks into scripts without much headache.

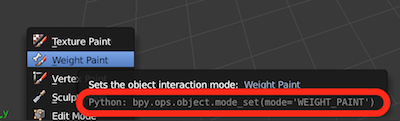

Mousing over anything in Blender reveals its API

When I first started Blender scripting I tried to find some open source libraries to use as references. Unfortunately, none of the ones that I found were unit tested, so I didn’t have any immediate examples of best practices.

Now that I’ve written some of my own Blender scripts I want to quickly share two Blender script unit testing approaches that I took while working on blender-iks-to-fks and blender-actions-to-json.

I use Node.js and tape to run my tests, but the two approaches that we’ll discuss should transfer over to your language and tools of choice.

Two types of tests

Most Blender scripts fall into two buckets:

Bucket one is scripts that export data from Blender to the user’s filesystem. An example of this type of script is one that exports a JSON file with the names of all of the objects in the scene.

Bucket number two is scripts that modify or create visuals. An example of this is a script that creates and places a hat on anything that it believes is a head.

Depending on the nature of the script, you can either:

- Export the relevant data to a file, read that file in your test runner and verify that it has the contents that you expect.

- Render an image of your Blender view and then use ImageMagick to verify that the rendered image matches what you expect it to look like.

Data tests

Your data tests will boil down to something like this pseudocode:

var cp = require('child_process')
var fs = require('fs')
var test = require('tape')

// The contents that we expect my-script.py to write
var expectedOutput = 'mesh1, mesh2, mesh3'

test('Exports a list of objects', function (t) {
  // Spawn headless Blender in a child process
  cp.exec(
    'blender model.blend -b -P my-script.py -- ./script-output-file.txt',
    function (err, stdout, stderr) {
      // Fail the test if Blender could not be run
      t.error(err)
      // Read the data that your script wrote to the file system
      var output = fs.readFileSync('./script-output-file.txt', 'utf8')
      // Verify that your script's output matches what you expect
      t.equal(output, expectedOutput)
      t.end()
    }
  )
})

Blender has a CLI that allows you to run a headless instance of Blender and then run scripts from inside of this Blender instance.

So you spawn a child process that runs Blender’s CLI and your script in the background. Your script writes its output to a file, and your test then reads that file and verifies that you got what you expected.
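
As a minimal sketch of how that command line fits together, the snippet below builds the argument list piece by piece. The helper name `buildBlenderArgs` is hypothetical (it is not part of Blender or tape); the flags themselves are the ones used in the test above.

```javascript
// Sketch: build the argument list for a headless Blender run.
// `buildBlenderArgs` is a hypothetical helper made up for illustration.
function buildBlenderArgs (blendFile, scriptFile, scriptArgs) {
  return [
    blendFile,
    '-b', // run in the background, without opening a window
    '-P', scriptFile, // run this Python script inside Blender
    '--' // everything after this is left for the script to read from sys.argv
  ].concat(scriptArgs)
}

var args = buildBlenderArgs('model.blend', 'my-script.py', ['./script-output-file.txt'])

// Print the equivalent shell command
console.log(['blender'].concat(args).join(' '))
```

An array like this can be handed to `cp.execFile('blender', args, callback)` instead of `cp.exec`, which sidesteps shell quoting if your file paths contain spaces.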

The Python addon or script that you’re testing might look something like this:

# In my-script.py
import sys

# Do a bunch of stuff

# Create a list of objects that we'll
# be exporting to a file
outputFileContents = 'mesh1, mesh2, mesh3'

# Get all args after `--`
argv = sys.argv
argv = argv[argv.index('--') + 1:]
outputPath = argv[0] # script-output-file.txt

# Write to the filesystem
with open(outputPath, 'w') as outputFile:
    outputFile.write(outputFileContents)
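
On the test side, it can be easier to compare structured data than a raw string. Here is a small sketch, assuming the comma-separated format written by the script above; `parseObjectList` is a hypothetical helper name.

```javascript
// Hypothetical helper: turn 'mesh1, mesh2, mesh3' into ['mesh1', 'mesh2', 'mesh3'].
function parseObjectList (fileContents) {
  return fileContents
    .split(',')
    .map(function (name) { return name.trim() }) // drop spaces and trailing newlines
    .filter(function (name) { return name.length > 0 }) // ignore empty entries
}

console.log(parseObjectList('mesh1, mesh2, mesh3\n'))
```

With the contents parsed into an array, a call such as `t.deepEqual(parseObjectList(output), ['mesh1', 'mesh2', 'mesh3'])` no longer fails over stray whitespace.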

Visual tests

Visual testing involves rendering your Blender scene and verifying that the rendering matches a reference image that you know is correct (usually because you verified it by hand once).

Technically speaking every visual that you create in Blender is backed by bytes of data that you have full access to. So while anything can be a data test, sometimes it is easier to test by just comparing renderings.

For example, in blender-iks-to-fks I’m taking an IK rig and creating an FK rig that should have the same poses.

While I could write all of my vertex positions to a file and compare the before and after, here it was easier to just take a before and after pic, compare them and call it a day. This meant that I didn’t need to maintain code in my script that exported data that I would only use for testing.

I have written about a similar visual testing approach while visually testing WebGL components.

Visual tests look something like this:

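var cp = require('child_process')

cp.exec(
  // `-noaudio` makes this work if you don't have
  // an audio card on your machine (Travis CI)
  // `-o` sets the path of the rendered image
  // `-F PNG` means use the PNG image format
  // `-f 10` means render frame number 10. Blender runs its arguments
  // in order, so `-f` goes last, after the output options are set
  'blender model.blend -b -noaudio -o actual.png -F PNG -f 10',
  function (err) {
    if (err) throw err
    // Blender adds the frame number to the name of the file that it
    // writes, so adjust `actual.png` below to match the file on disk
    cp.exec(
      // Use ImageMagick to test that your image is what you want.
      // `null:` discards the difference image that `compare` would write
      'compare -metric RMSE actual.png expected.png null:',
      function (_, stdout, stderr) {
        // `compare` prints the metric to stderr, so read
        // stderr to get your image comparison results
      }
    )
  }
)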

You render your Blender view to a PNG, then test that the PNG matches an expected PNG that you’ve committed.

ImageMagick’s compare prints the metric to stderr. If you’re expecting a perfect match, you should check stderr for 0. If a slight difference between the two images is acceptable, you can check that the root mean square error, which is a number that represents how different the images are, is below a threshold that you choose.
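
Here is a rough sketch of that check. It assumes that `compare -metric RMSE` prints an absolute value followed by a normalized value in parentheses, for example `2282.91 (0.0348349)`, and that this text arrives on stderr. The helper names and the tolerance values are made up for illustration.

```javascript
// Hypothetical helper: pull the normalized RMSE out of ImageMagick's output.
// Assumes the `absolute (normalized)` format, e.g. '2282.91 (0.0348349)'.
function parseNormalizedRmse (stderr) {
  var match = /\(([^)]+)\)/.exec(stderr)
  if (!match) return NaN
  return parseFloat(match[1])
}

// True when the images differ by no more than `tolerance` (0 means identical).
// Output that cannot be parsed counts as a mismatch.
function imagesMatch (stderr, tolerance) {
  var rmse = parseNormalizedRmse(stderr)
  return !isNaN(rmse) && rmse <= tolerance
}

console.log(imagesMatch('0 (0)', 0)) // perfect match required
console.log(imagesMatch('2282.91 (0.0348349)', 0.01)) // strict tolerance
console.log(imagesMatch('2282.91 (0.0348349)', 0.05)) // looser tolerance
```

Inside the `compare` callback you could then write something like `t.ok(imagesMatch(stderr, 0.01))`. The normalized value is handy because it stays between 0 and 1 regardless of the image's bit depth.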

Nice!

And that wraps it up! Check out the two repos if you need some example code.

- CFN